Weeks 10 & 11: Lean Validation: Testing Multiple Assumptions per Test, Test Documentation, Extracting Insights

Video Walkthroughs & Concrete Examples from Vera Field's Test Documentation

TL;DR & Contents

Hitting the “viable” mark: even minimal tests need enough quality to give reliable insights. Don't let a shoddy setup mask a good idea.

Tying success metrics to riskiest assumptions for clean analysis

Tallying the results

Focus on extracting true, surprising learnings, not just surface-level feedback

Attaching weight to the evidence. In qualitative tests, evidence weight is naturally low (hypothetical, self-reported behavior)

Calm decision-making after patterns emerge. Resist knee-jerk reactions to single pieces of feedback

I’m publishing my test documentation on Notion (Outline; Assumptions; Success metrics; Results)

Recap: ICP, #1 opportunity, ideas, assumptions

First, a quick recap:

ICP: small field service teams with fewer than 20 technicians in English-speaking countries (side note: I’m thinking of switching the persona from “field service manager” to “owner”)

#1 opportunity: It needs to be extremely simple and low overhead for field technicians to capture service notes

Ideas:

#1 Customizable forms / intuitive form builder. Allow back-office staff to easily create custom forms and checklists, so that field engineers can use the engineer mobile app to fill out the exact right forms (background: We know that most comprehensive Field Service Management suites

#2 Predefined answer blocks. It should be possible to configure predefined answer blocks in the admin backend: frequently repeated pieces of text. These then become available as answer blocks in the free-text fields (e.g., “I checked the refrigerant”, “I tightened the bolt”, etc.).

#3 Photo annotation (drawing on pictures, adding text)

Some of my riskiest assumptions for idea #1

The value proposition of "exact right forms" is strong enough to drive adoption and willingness to pay among the ICP.

Current service documentation processes are failing, and this is a severe, recurring pain point (hair on fire)

We can find a scalable, repeatable customer acquisition channel (offline events; partnerships; SEA/paid ads; content; App Store/Play Store?)

Technicians will prefer to use the Vera Field mobile app over how they do things today

I've only listed some of the assumptions for Idea #1 here, but we identified assumptions for the other two ideas as well, and many of them overlap. Because of this overlap, testing a single core assumption can often validate or invalidate multiple ideas at once.

Choosing Minimum Viable Tests

I want to validate my riskiest assumptions as cheaply and quickly as possible. Because they matter so much, I need to invest time to de-risk them, but I don’t want to waste resources on robust tests (e.g., building an MVP) for something that’s still so uncertain.

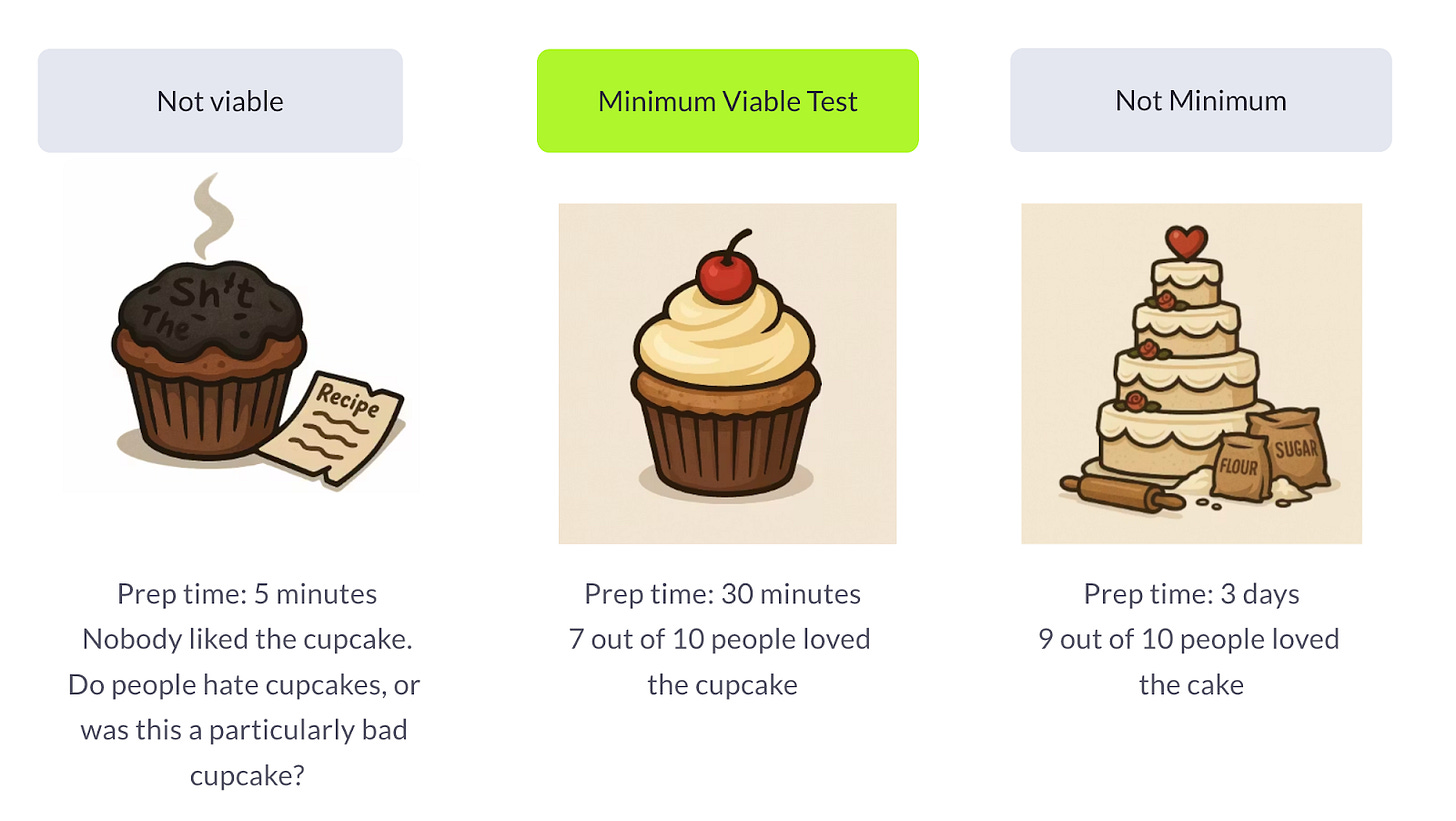

Think of it as baking a single cupcake to test a new recipe before preparing a multi-tiered wedding cake.

The trick, though, is hitting that "viable" mark. Go too minimal, and your test results become useless. Imagine running a fake door marketing test with a sloppy landing page. If you fail to meet your success metrics, was it because your value proposition didn't resonate, or because your landing page looked like sh*t? You need just enough quality to ensure that any failure (or success) can be confidently attributed to your idea, not the shoddy test setup.

Testing multiple assumptions per test

I almost always test multiple assumptions per test, a practice I find incredibly efficient.

What’s the risk?

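The core principle behind testing assumptions, rather than fully fleshed-out ideas, is to enable smaller, cheaper, and faster tests. This approach also allows us to isolate the "why" behind user behavior. Bundling several assumptions into one test puts both benefits at risk: the test grows, and it becomes harder to tell which assumption drove a given reaction.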

Testing multiple assumptions per test is fine as long as you can mitigate the risk. If you keep your tests lean and can cleanly separate each assumption within the test, tackling multiple assumptions at once becomes a powerful way to accelerate learning.

Now, don't burn me at the stake here, but in some specific cases, I even test multiple ideas within a single test. This is only when I'm highly confident I can dissect a test participant's reaction to pinpoint exactly which individual assumption or idea drove their response.

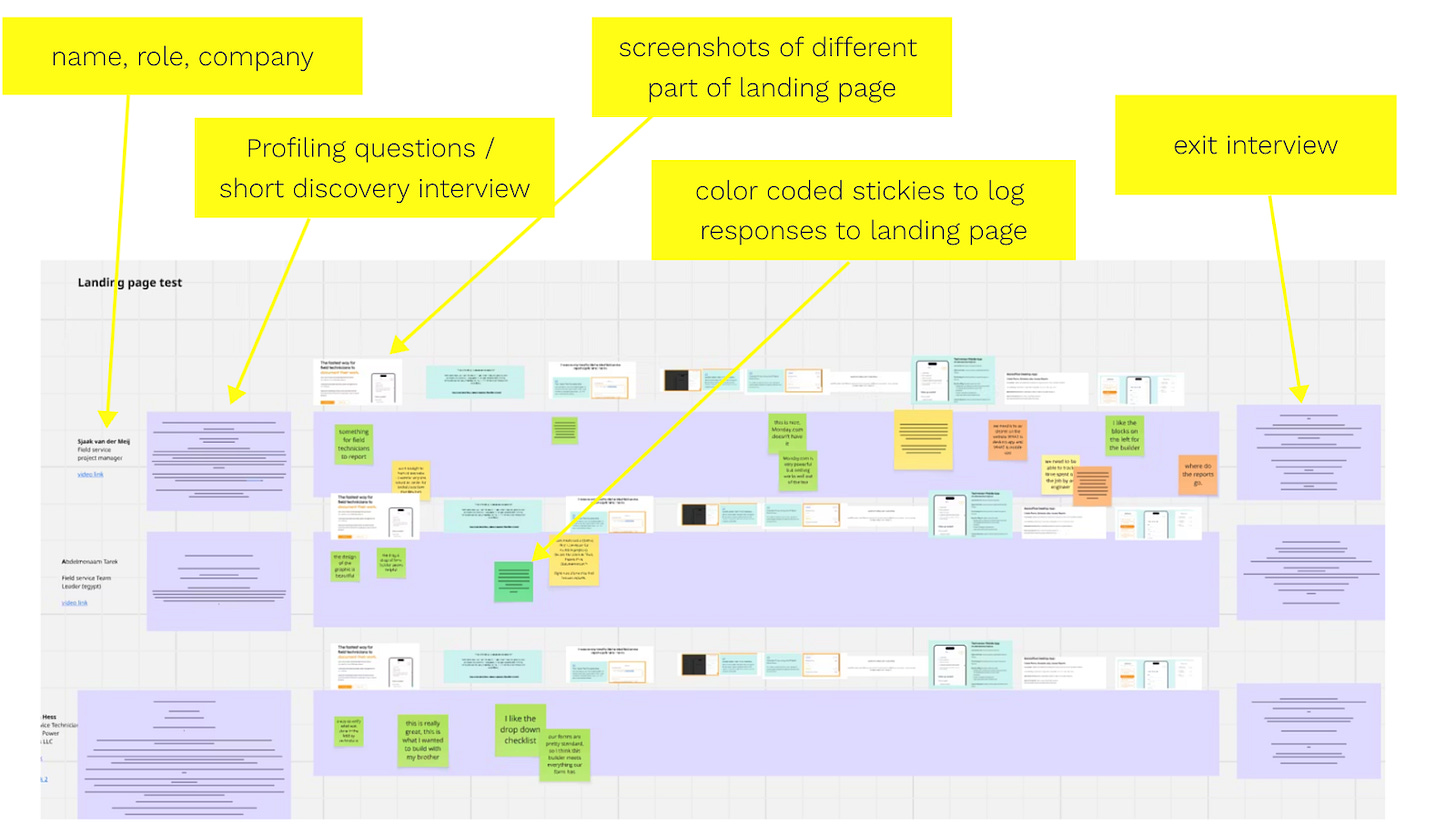

This is precisely why I'm a huge fan of 1:1 landing page tests. With these, I can present various benefits, features, and even product videos or GIFs, asking the viewer to narrate their exact thoughts on each element. It's like having a real-time "wow" vs. "meh" meter for every point. When I have participants compare two different landing pages, I can even test distinct value propositions or explore entirely different pain points.

For example, the current Vera Field website combines several ideas related to the core customer opportunity of faster mobile service note capture (custom forms, predefined answer blocks, photo annotation, offline-first, etc.).

The same principle applies to prototype tests. These allow me to quickly validate crucial aspects such as:

Do users care about the overall idea?

Can they easily locate important interactive elements?

Are they inclined to click those elements?

Do they understand the intended function of the feature?

Does the feature deliver on their expectations?

Tying together assumptions, success metrics, exit interview questions

Each assumption you test should have its own success metric and an exit interview question associated with it.

Example assumption

“The value proposition of the ‘exact right forms’ idea is strong enough to excite the ICP”

Success metric:

7 out of 10 test participants say this solution idea would be significantly better than the alternative they’re using today

Exit interview question:

“Do you believe this tool would be significantly better than how you are doing things today?”

Image: logging test participants’ responses to the website and the exit interview questions on a Landing Page Test Miro board.
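To make the link between an assumption, its success metric, and its exit interview question concrete, here is a minimal Python sketch of the pass/fail tally. It assumes each participant's answer to the exit question has already been reduced to a simple yes/no. The `AssumptionTest` class, its field names, and the sample answers are hypothetical illustrations, not a reflection of how responses are actually logged on Miro.

```python
from dataclasses import dataclass


@dataclass
class AssumptionTest:
    """One assumption, tied to its success metric and exit interview question (hypothetical structure)."""
    assumption: str
    exit_question: str
    required_yes: int   # success metric numerator, e.g. 7 ...
    participants: int   # ... out of 10 participants

    def evaluate(self, answers: list[bool]) -> tuple[int, bool]:
        """Count 'yes' answers to the exit question and check them against the success metric."""
        if len(answers) != self.participants:
            raise ValueError(f"expected {self.participants} answers, got {len(answers)}")
        yes = sum(answers)  # True counts as 1, False as 0
        return yes, yes >= self.required_yes


exact_right_forms = AssumptionTest(
    assumption='The value proposition of the "exact right forms" idea is strong enough to excite the ICP',
    exit_question="Do you believe this tool would be significantly better than how you are doing things today?",
    required_yes=7,
    participants=10,
)

# Made-up answers, one per participant (True = "yes")
answers = [True, True, False, True, True, False, True, True, True, False]
yes, passed = exact_right_forms.evaluate(answers)
print(f"{yes}/{exact_right_forms.participants} said yes -> {'PASS' if passed else 'FAIL'}")
# prints "7/10 said yes -> PASS"
```

Keeping the threshold next to the question means the pass/fail call is fixed before any results come in, which makes it harder to reinterpret a borderline result after the fact.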

Multiple tests per assumption

Layering your tests, or performing multiple tests to validate the same assumption, is a crucial strategy, especially when working with qualitative methods and smaller participant pools.

I love qualitative tests because they are so rich in insights, and let’s face it: in an early-stage startup, qualitative tests are often all we’ve got. The big downside: they carry a high risk of false positives or negatives due to their small sample sizes. By testing the same assumption through various methods (for example, combining in-depth interviews with a lightweight prototype test and then a broader landing page test), you build a more robust body of evidence.
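To put a rough number on the small-sample risk, here is a sketch using a binomial model, which assumes participants answer independently and each says "yes" with the same probability. The 50% "true" rate is a hypothetical figure chosen for illustration. The sketch shows how often a 7-out-of-10 success metric would pass by chance alone, and how much that drops when two independent tests must both pass.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p): chance that at least k of n participants say yes."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical: only half of the ICP would truly find the idea significantly better.
p_true = 0.5

single = prob_at_least(7, 10, p_true)
print(f"One 7-of-10 test passes anyway: {single:.3f}")         # prints 0.172

# Assuming two independent tests with the same bar, both must pass.
print(f"Two independent tests both pass: {single ** 2:.3f}")  # prints 0.030
```

Real layered tests use different methods, so they are neither identical nor truly independent. Treat these numbers as intuition for why layering helps, not as a precise error rate.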

Extracting insights

Extracting actionable insights from my tests is a disciplined, multi-step process.

Tallying for Pass/Fail: First, I rigorously assess whether each test passed or failed. I go through the results (which I often log on Miro) and review the exit interviews to tally responses against my success metrics. For example, I'll tally how many participants said ‘yes’ to my question “would this solution be significantly better than how you do things today?”

Identifying Real Insights: Next, I focus on pulling out only the key learnings. This means ruthlessly cutting fluff and focusing only on what’s important and surprising/new. "A lot of people liked it" is not an insight. Instead, some concrete new insights for me were: "The German market might be saturated due to players like PDS and Taifun," or "Possibly, Field Service Managers are not the 'champions'; I should talk directly to owners."

Weighting the Evidence: Make sure to assign appropriate weight to each piece of evidence. In qualitative tests, we’re capturing hypothetical behavior, which often doesn’t line up with actual behavior. I treat negative feedback with more gravity than positive responses (e.g., a "no" to willingness to pay is taken much more seriously than a "yes"). I probe beyond surface-level responses to understand what participants are really thinking. Listening for genuine emotion is a big part of this (something LLMs can't do!). I look for:

Excitement and Specificity: "This would save me 2 hours/week" or "I love this clean UI."

Signs of Real-World Pull: "When can I use this?" is far better than "I think I could use this."

Unprompted Recognition of the Pain: If the problem surfaces naturally within the first 15 minutes of conversation, it’s likely to be real (although I often like to follow up with a “how important is that” to be more sure). At the end of each session, I take a moment to order my notes and thoughts, critically assessing if the person was honest or just trying to humor me.

Making Decisions: I understand the urge to immediately change the landing page or prototype/MVP as soon as feedback comes in. Fight that urge. Only make changes once clear patterns and compelling evidence have emerged across multiple tests.
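The weighting and decision principles in this section can be sketched as a simple scoring scheme. This is a hypothetical illustration rather than a formula from my actual tests: the weights (evidence against an assumption counts double, weak signals count half), the two-test minimum, and the decision threshold are all assumptions picked to mirror the principles above, and the observations are made up.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical weights: strong signals ("When can I use this?") beat weak ones
# ("I think I could use this"), and evidence against an assumption counts double.
BASE_WEIGHT = {"strong": 1.0, "weak": 0.5}
AGAINST_MULTIPLIER = 2.0


@dataclass
class Observation:
    test: str        # which test it came from, e.g. "single landing page"
    assumption: str  # which assumption it speaks to
    supports: bool   # True if it supports the assumption, False if it contradicts it
    strength: str    # "strong" or "weak"


def decide(observations: list[Observation], min_tests: int = 2, threshold: float = 2.0) -> dict[str, str]:
    """Per assumption: only call it once evidence spans enough tests and clearly leans one way."""
    tests = defaultdict(set)
    score = defaultdict(float)
    for obs in observations:
        weight = BASE_WEIGHT[obs.strength]
        tests[obs.assumption].add(obs.test)
        score[obs.assumption] += weight if obs.supports else -AGAINST_MULTIPLIER * weight

    decisions = {}
    for assumption, s in score.items():
        if len(tests[assumption]) < min_tests:
            decisions[assumption] = "keep testing"  # one test is not a pattern yet
        elif s >= threshold:
            decisions[assumption] = "looks validated"
        elif s <= -threshold:
            decisions[assumption] = "looks invalidated"
        else:
            decisions[assumption] = "keep testing"
    return decisions


obs = [
    Observation("pitch test", "willingness to pay", True, "weak"),
    Observation("single landing page", "willingness to pay", True, "weak"),
    Observation("double landing page", "willingness to pay", False, "strong"),
    Observation("pitch test", "exact right forms is compelling", True, "strong"),
    Observation("prototype test", "exact right forms is compelling", True, "strong"),
    Observation("prototype test", "technicians prefer the app", True, "strong"),
]
print(decide(obs))
```

With these made-up observations, two weak "yes" signals on willingness to pay are outweighed by one strong "no", so that assumption stays at "keep testing". The technician assumption also stays at "keep testing" despite its strong signal, because it has only been seen in a single test.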

Conclusion

A strong test strategy isn't just about de-risking ideas; it's more like a compass guiding your next steps. You'll never have absolute certainty, and that's perfectly fine, since most product decisions can be easily reversed. When uncertainty is high, the likelihood of needing to reverse course is also high, which is precisely why keeping your upfront investment low is so important.

I've found that what truly works is:

Selecting the Minimum Viable Test that's best suited for the specific assumptions I'm testing.

Diligently extracting key learnings from every interaction (and that means active reflection on my part, not just assuming my AI note-taker has captured everything; it never has).

Embracing a layered testing approach.

I'm comfortable testing multiple assumptions, or even different ideas, within a single test, provided I can confidently isolate the "why" behind user reactions.

Finally, resist the urge to immediately adapt your test material (like a landing page, prototype, or MVP) after receiving the first piece of feedback. Instead, critically analyze the evidence and let clear patterns emerge before making your next move.

This disciplined approach ensures your efforts drive real impact, rather than just busywork.

I’m publishing my test documentation on Notion.

For each test, I document the outline, assumptions, success metrics, and results.

For the landing page and prototype tests, I also included how the pages and prototypes were built (tooling and a step-by-step process).

So far, I’ve documented:

Pitch test at a conference

Single landing page test

Double landing page test

Prototype test / user lab

Marketing fake door test