Weeks 8 & 9 - Ideation & Riskiest Assumptions Mapping

Theory & Video Walk-Throughs Of My Ideation Board & Riskiest Assumptions Map

For the past nine weeks, I've been building a B2B startup from scratch, with AI as my (sometimes unreliable) sparring partner. My aims are simple: share real-world product management applications and everything I learn about AI along the way. My target: 5 signed Letters of Intent within 13 weeks.

Missed the start?

My goals and principles: Building a Bootstrapped B2B Product with Gen AI (0 to 1)

Week 1: Hunting for My Underserved Niche ICP with AI & real-world signal (ICP smoke test)

Weeks 3 & 4: Zeroing In On My ICP With Interviews & Prototype Tests

Week 5: The User Lab. A Guide to Integrating Interviews, Landing Pages, and Prototypes

Big news: Peppe Silletti turns my one-man band into a two-man band

Meet Peppe. He's a product engineer with a decade of experience, including six years in product. When he’s not digging into user needs, he’s busy as a community lead and organising meetups. We connected on LinkedIn and became fast friends over our shared philosophy on building products.

Over the past weeks I realized two big things:

Alone is very alone, and LLMs can’t replace humans. Sure, they’re decent sparring partners, but they're still glorified word-association machines that like to reinforce the beliefs we already have (hello, bias). I've explored their limitations before, but the bottom line is that they lack true understanding, root-cause analysis, and the ability to adapt to new learnings. They can only rehash existing ideas. A real human, especially one with Peppe's insights, outpaces the machine by far.

"Vibe-coding" has its limits for a non-technical product person like myself. While I can whip up a decent prototype in an hour, that's usually where my solo building journey stops. I could pour days into perfecting it, but I'd never unleash it into the wild. As behavioral mathematician Barbara Lampl wisely noted, LLMs should amplify your strengths, not mask your weaknesses. If you can't assess "good," don't use it to cover that gap.

Peppe, thanks for joining me in this adventure!

Ideating solution ideas for the #1 customer opportunity

Our #1 Customer Opportunity: Keeping it Simple for Field Techs

In weeks 6 & 7, we zeroed in on our top customer opportunity:

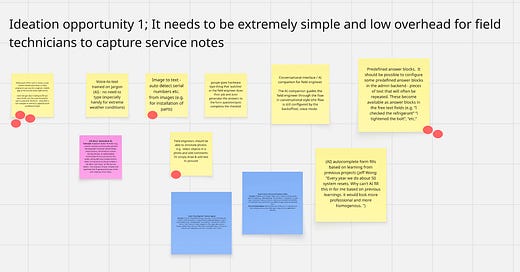

"It needs to be extremely simple and low overhead for field technicians to capture service notes."

Am I 100% sure this is the problem, a "hair-on-fire" issue with no good existing solutions for small field service teams?

Nope. But that's okay. I'm ready to be proven wrong.

Ideating Solution Ideas: My Process

Tools

Miro

Gemini Flash (my current go-to model), with a custom Gem

Human-Powered Ideation: The Basics

Generate Volume, Then Narrow: The goal is to brainstorm a lot of ideas. Think super-simple to wildly complex, familiar to "cray-cray." Keep pushing yourself to ideate solo until it hurts.

Why? The first idea is rarely the best. As product leaders like Teresa Torres emphasize (and research confirms), a 'one-and-done' approach stifles creativity and prevents true innovation. This is precisely what happens when we jump to implementing user-requested features without first understanding the underlying problem.

Start Solo, Then Combine: Even with a team, I always recommend individual ideation first. As Teresa Torres rightly points out, brainstorming together can lead to groupthink, limiting the diversity of ideas.

My typical ideation flow:

Workshop: Select the #1 customer opportunity (together) and ensure everyone has a shared understanding of what it means.

Solo Work: Everyone (ideally a diverse group!) blocks at least two hours for asynchronous ideation.

Workshop: Cluster solution ideas. One person presents, others raise hands for similar concepts. Even with overlap, keep ideas distinct if they’re not exactly the same.

I completed my ideation process before Peppe joined the team. I copied our #1 opportunity from the Opportunity Solution Tree and filled virtual stickies with distinct, small solution ideas I believe tackle this pain point.

Example: Small, Distinct Ideas

Wrong: "The fastest way for technicians to capture service notes: with custom form builder, conditional logic, picture-to-text, photo annotation, pre-set answer blocks" (This is a full solution, not a single idea.)

Right:

Custom form builder with conditional logic

Picture to text

Photo annotation

Pre-set answer blocks

LLM-Powered Ideation: Leveraging Custom Gems/GPTs

I built a Custom Gem (I’m a Gemini fan; custom Gems are similar to custom GPTs) loaded with all the context I've gathered over the past few weeks:

My core beliefs about my Ideal Customer Profile (ICP)

Insights on their Jobs to Be Done (JTBD) and customer opportunities

Additional constraints and desires, like the principles from "Building a Bootstrapped B2B Product with Gen AI (0 to 1)," my focus on "hair-on-fire" problems solvable with simple software, and a preference for point solutions over full suites

For each belief, I included the supporting evidence and told the LLM how confident I am in it.

In my ideation thread, I added more specific context:

Details about my #1 opportunity: why I believe it's a "hair-on-fire" problem, backed by exact customer quotes and insights

My own generated solution ideas

I guided the LLM through three rounds of idea generation:

Out-of-the-box, imaginative concepts

Low-cost ideas that still resonate with my target audience

Ideas informed by competitor analysis

Find my exact prompts here under the header “Ideation: Ideating solutions with Gemini”

Watch the video below for a walkthrough of my ideation Miro board.

Selecting the top 3 ideas to test

Why test 3 ideas instead of just 1?

Compare and Contrast for Better Decisions: As Teresa Torres emphasizes in "Continuous Discovery Habits" (I read other books too, I swear!), compare-and-contrast decisions are far more reliable than simple "do vs. don't" choices. Judging if an idea is "good" is relative. It's much easier when you have something to compare it against. Am I a good product manager? Who knows. But I'm definitely better than my four-year-old (though we could debate that).

Gauge True Excitement: Testing multiple solution ideas with the same test participant lets me see the breadth of their excitement levels. As I wrote in my article “Week 5: The User Lab. A Guide to Integrating Interviews, Landing Pages, and Prototypes”: "Was the 'WOW' a genuine expression of excitement, or merely polite enthusiasm because I was watching over their shoulder? This requires a strong dose of gut feeling and brutal honesty. Presenting multiple prototypes to a single participant can help gauge the breadth of their excitement levels more accurately."

Idea Selection Criteria

Instead of jumping straight to ICE scoring, I focused on the variables that truly matter to me when selecting winning solution ideas:

Quick & Easy to Build (MVP): Simplicity first.

Easy for ICP to Understand: Ideas must be extremely clear to my Ideal Customer Profile (field service managers at small companies with fewer than 20 technicians).

Willingness to Pay: The ICP should be willing to pay at least $100/month for the solution.

The ‘right’ decision depends on which variables matter most to you for this specific decision.

If you don’t make those variables explicit and focus on the few that matter most, you’ll struggle to make the right decision.

Keeping these variables in mind, I selected the following three ideas for testing:

Custom Forms/Checklists for Technicians: Allow back-office staff to easily create custom forms and checklists that engineers can fill out directly in their mobile app. (Interviews revealed many Field Service Management suites hide full form customization in higher pricing tiers).

Predefined Answer Blocks: Enable configuration of frequently repeated text snippets in the admin backend. These would then be available as quick answer blocks in free-text fields (e.g., "I checked the refrigerant," "I tightened the bolt," etc.).

Photo Annotation for Field Engineers: Allow field engineers to annotate photos by selecting objects and adding comments, or simply drawing and adding text directly onto pictures.
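To show what "making the variables explicit" can look like, here's a minimal Python sketch that turns my three criteria into a pass/fail screen. Only the $100/month willingness-to-pay floor comes from the criteria above; the 5-day build threshold, the field names, and the `Idea`, `passes_criteria`, and `shortlist` helpers are hypothetical illustrations, not how I actually scored my ideas.

```python
from dataclasses import dataclass

# Hypothetical proxy for "quick & easy to build"; pick your own threshold.
MAX_BUILD_DAYS = 5
# From the criteria above: the ICP pays at least $100/month.
MIN_MONTHLY_WTP_USD = 100


@dataclass
class Idea:
    name: str
    build_days: int           # hypothetical MVP effort estimate
    clear_to_icp: bool        # did the ICP grasp it without extra explanation?
    est_monthly_wtp_usd: int  # hypothetical willingness-to-pay estimate


def passes_criteria(idea: Idea) -> bool:
    """An idea qualifies only if it meets every explicit criterion."""
    return (
        idea.build_days <= MAX_BUILD_DAYS
        and idea.clear_to_icp
        and idea.est_monthly_wtp_usd >= MIN_MONTHLY_WTP_USD
    )


def shortlist(ideas: list[Idea], k: int = 3) -> list[Idea]:
    """Keep qualifying ideas, quickest to build first, capped at k."""
    qualifying = [i for i in ideas if passes_criteria(i)]
    return sorted(qualifying, key=lambda i: i.build_days)[:k]
```

The point isn't the arithmetic; it's that writing the criteria down forces you to say which variables you're deciding on, and an idea that fails any one of them drops out instead of being rescued by a high score elsewhere (which is what a summed score like ICE would allow).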

Assumptions mapping

Before I jump into any test strategy – or worse, start building a solution – I always map my assumptions. This pinpoints my riskiest assumptions, leading to a precise test strategy.

What is Riskiest Assumptions Mapping?

Popularized by David Bland in "Testing Business Ideas" (co-authored with Alex Osterwalder), Riskiest Assumptions Mapping is a strategic exercise that helps teams:

Identify all underlying beliefs driving a product or service.

Prioritize them on a matrix (usually "importance" vs. "evidence," or "risk" vs. "uncertainty").

Pinpoint "riskiest assumptions": those crucial to success but with the least supporting evidence.

Focus early, targeted experiments on these assumptions to quickly validate or invalidate them, minimizing the risk of building on flawed foundations.
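To make the matrix idea concrete, here's a minimal Python sketch of an importance-vs-evidence map. The 1 to 5 scales, the midpoint split, and the quadrant labels ("test first", "plan around", "watch", "defer") are my own illustrative assumptions, not terminology from "Testing Business Ideas".

```python
from dataclasses import dataclass

# Assumed scale: 1 (low) to 5 (high) on both axes; the midpoint split is arbitrary.
MIDPOINT = 3


@dataclass
class Assumption:
    statement: str
    category: str    # desirability, viability, feasibility or usability
    importance: int  # how critical to success (1-5)
    evidence: int    # how much supporting evidence we have (1-5)


def quadrant(a: Assumption) -> str:
    """Place an assumption on the importance-vs-evidence matrix (labels are illustrative)."""
    important = a.importance >= MIDPOINT
    supported = a.evidence >= MIDPOINT
    if important and not supported:
        return "test first"   # riskiest: crucial to success but unproven
    if important and supported:
        return "plan around"  # crucial and reasonably backed by evidence
    if not supported:
        return "watch"        # unproven but not critical
    return "defer"            # neither critical nor uncertain


def riskiest_first(assumptions: list[Assumption]) -> list[Assumption]:
    """Return 'test first' assumptions, most important and least evidenced first."""
    risky = [a for a in assumptions if quadrant(a) == "test first"]
    return sorted(risky, key=lambda a: (-a.importance, a.evidence))
```

Because the map is meant to evolve, raising an assumption's `evidence` score after a test and calling `quadrant()` again is the code equivalent of moving a sticky note across the board.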

Why Test Assumptions, Not Full Solutions?

My goal is for tests to be as cheap and lightweight as possible. By testing the riskiest hypotheses underpinning my ideas, I can:

Create much smaller tests. Testing the single assumption: “We can make it easy enough for back office teams to create a mobile form that reflects their current workflow” doesn’t require launching a full product. Instead, we can run a 1:1 user lab with a click dummy version of the form-builder.

'Wipe out' multiple ideas at once. I often find the same riskiest assumption underpins several ideas. Falsifying that single assumption can 'kill' multiple ideas simultaneously, saving significant time.

As I detailed in "Your MVP didn’t fail; you didn’t set it up to teach you anything," testing an entire solution makes it nearly impossible to pinpoint why it failed if it doesn't work. It's far more effective to explicitly list all underlying assumptions, select the riskiest ones, and test those specifically.

Video walk-through Riskiest Assumptions Map

In this video, I'll walk you through my riskiest assumptions map and explain why failing to isolate these assumptions in tests can lead to trouble.

The Cost of Skipping Assumption Mapping: Two Scenarios

Scenario 1: Launching an MVP Without Assumption Mapping

Imagine I skip mapping my assumptions and jump straight into building and launching an MVP. If this MVP "fails" (meaning it doesn't hit my target adoption or retention metrics), I'll be left completely in the dark.

Was the value proposition weak?

Does the problem even exist?

Did the back-office staff struggle to use it?

Or was it the field engineers who couldn't use the app?

I can't pinpoint the exact failure point.

Scenario 2: The Power of Riskiest Assumptions Mapping

Now, let's look at Scenario 2, where I've meticulously laid out my riskiest assumptions.

For the idea "Allow back office staff to easily create custom forms/checklists, so that field engineers can use the engineer mobile app to fill out the exact right forms," some key assumptions include:

Desirability: The value proposition of "exact right forms" is compelling enough to drive adoption and willingness to pay among the ICP.

Desirability: Current, failing service documentation processes are a severe, recurring "hair-on-fire" pain point.

Viability: We can find a scalable, repeatable customer acquisition channel.

Usability: Field engineers will easily and quickly use the forms created with Vera Field to capture notes.

Usability: Back-office teams (non-technical managers) can easily create mobile forms that mirror their current paper forms.

For each assumption, I ask: "What's the best (cheapest and most lightweight) test that can tell me if this is true or false?"

Take the "value proposition" assumption, for example. I could test it by launching an MVP and tracking sign-ups and retention. But I'll learn more about user thinking from a qualitative landing page test. Qualitative 1:1 tests provide deep insights into how people think, while quantitative tests show actual behavior in real-world settings. You need both to complete the puzzle.
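The question "what's the cheapest test that can tell me if this is true or false?" can be framed as a tiny selection rule: among the tests that produce the kind of evidence you need, pick the cheapest. In this Python sketch the option names come from this article, but the hour estimates are hypothetical placeholders, and reducing evidence to a "qualitative"/"quantitative" label is my simplification.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ExperimentOption:
    name: str
    cost_hours: float   # hypothetical effort estimate, not a measured value
    evidence_type: str  # simplification: "qualitative" or "quantitative"


def cheapest_test(options: list[ExperimentOption], needed: str) -> Optional[ExperimentOption]:
    """Return the lowest-cost option yielding the needed evidence type, or None."""
    matching = [o for o in options if o.evidence_type == needed]
    return min(matching, key=lambda o: o.cost_hours, default=None)


# Placeholder estimates for illustration only.
OPTIONS = [
    ExperimentOption("Launch MVP, track sign-ups and retention", 80, "quantitative"),
    ExperimentOption("Qualitative landing page test (user lab)", 6, "qualitative"),
    ExperimentOption("Prototype test (user lab)", 8, "qualitative"),
]
```

With these placeholder numbers, asking for qualitative evidence returns the landing page test, while asking for quantitative evidence still returns the MVP launch, which reflects the point above: you need both kinds of evidence, but you don't have to pay MVP prices to start learning.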

Your Riskiest Assumptions Map: A Living Document

Your riskiest assumptions map isn't a one-time exercise; it's a living document that constantly evolves and informs your test strategy. As you validate or falsify assumptions, you'll move them across your map.

Continuously mapping out your assumptions helps you:

Uncover Logical Gaps: Identify blind spots in your thinking. Color-coding assumptions by category (desirability, viability, feasibility, usability) helps immensely here.

Ensure Team Alignment: Check if your team is on the same page about the solution idea (after everyone's done their solo work, of course!).

Be Honest About Evidence: Assess your confidence in each assumption based on the evidence collected. Is the evidence strong enough?

Prioritize Next Steps: Know which assumption to tackle next and choose the smallest viable test to run.

Output of the last 2 weeks

Conducted 5 more interviews. Our outreach-to-call conversion rate improved drastically with personalized video outreach (I record each video manually, no AI here!). We're still exploring other channels.

Chose the name Vera Field. Peppe vibe-coded an MVP of the form builder with a mobile preview, and I put together a website to use in our moderated user tests.

(Feedback very welcome <3. Keep in mind, we won’t be directing traffic to our website or MVP yet; both will only be used in 1:1 test sessions.)

Can you make an intro to small field service teams (fewer than 20 engineers, e.g. HVAC or solar installation/servicing)? Do you have experience with this target audience that you’d be willing to share? Find me on LinkedIn.

Next Week: Running Our First Tests

Next week, we'll be diving into our initial round of tests:

Pitch test (I’ll be going to my first in-person conference in Berlin)

Qualitative landing page test (user lab)

Prototype test (user lab)