Weeks 3 & 4: Zeroing In On My ICP With Interviews & Prototype Tests

Practical Guide to Participant Recruitment, Interviews, Prototype Tests, Extracting and Mapping Insights & Opportunities (OST)

Missed the start?

My goals and principles: Building a Bootstrapped B2B Product with Gen AI (0 to 1)

Week 1: Hunting for My Underserved Niche ICP with AI & real-world signal (ICP smoke test)

TLDR;

Weeks 3 and 4 focused on defining an initial ICP, attempting AI quote scraping, recruiting participants, conducting interviews and prototype tests, extracting insights and opportunities, and beginning Opportunity Solution Tree (OST) mapping.

Output:

Three ICP interviews, three expert interviews

Insights:

Positives:

Strong pain signals around service documentation and a seemingly favorable competitive space.

Refined ICP description

High-level idea for a simple service documentation solution for small teams (2-20 field engineers)

Negatives:

Low LinkedIn interview conversion

No insights on willingness to pay.

First Iteration ICP Definition (before interviews)

Defining Your Initial ICP: Navigating the Early Discovery Tightrope

The Problem: Without a clearly defined ICP, my chances of detecting patterns are low. But a lack of evidence makes defining an ICP tricky.

On one hand, a focused ICP is essential. It concentrates your research efforts and significantly boosts the odds of spotting meaningful patterns. For instance, interviewing Field Service Managers from teams of all sizes (say, from 2 to 2,000 engineers) would likely surface such divergent jobs-to-be-done, unmet needs, and existing alternatives that uncovering consistent patterns would become nearly impossible.

However, in these early stages, a lack of hard evidence makes it tricky to confidently narrow your focus. The last thing we want is to invent constraints out of thin air.

The Solution: Draft your first ICP based on informed assumptions

Here’s how to approach it:

Do: Formulate your initial ICP assumptions based on your desktop research or your own knowledge. Critically assess these assumptions: Does this attribute genuinely seem important? Does this focus feel right based on what you've learned so far?

Don’t: Artificially restrict your ICP with attributes that don't yet make sense, simply for the sake of having a narrow definition.

My Rule of Thumb:

I typically dedicate one to two months to intensive discovery with this initial ICP. After this period, I pause and take stock: Do I stick with this ICP, narrow it further, or is a pivot necessary based on what I've learned?

What Doesn't Work:

Failing to narrow down your ICP at all before starting research. This is a recipe for diluted efforts and inconclusive findings.

Trying to test with two or more distinct ICPs simultaneously. You'll inevitably spread your resources and attention too thin, hindering your ability to go deep with any single group.

My first ICP (before interviews)

My focus on Field Service Management rests on three risky assumptions:

A Favorable Competitive Landscape: My analysis indicates that most companies in my target ICP rely on manual processes or non-specialized tools (Google Docs, PowerPoint). While familiar with comprehensive FSM software, they find it too complex and costly. Specialized tools for smaller teams exist, but market awareness is low and no clear leader has emerged.

Low Software Penetration in My Target Segment: The predominance of manual tools suggests these teams may not be familiar with "decent" software, potentially lowering the bar for a valuable MVP.

A Relatively Accessible ICP: An initial LinkedIn "smoke test" (3 interviews from 50 cold outreaches) provided an early, albeit small, positive signal.

AI-Powered Quote Scraping

Scraping customer quotes using Gemini 2.5 Pro proved less useful than anticipated. The primary issues were the inability to verify if quotes came from individuals within my ICP and the lack of context, making interpretation difficult. Exploratory interviews remain the gold standard.

Find my conversation-starter prompts on my Notion page, under “Voice of Customer Research”.

Recruiting Interviewees Who Fit My ICP: Strategies and Channels

1. LinkedIn Sales Navigator (€99.99/month) + LinkedRadar ($19.90/month)

I experimented with different outreach messages:

Generic: “Hi, I’m interviewing Field Service Managers with teams of 2-20 field engineers to learn more about friction points in their day-to-day work. I can imagine that tasks like scheduling incoming jobs, handling customer communication, or dealing with incomplete or missing service reports can cause headaches. Would you be open to sharing your insights during a short call?”

JTBD/Pain Point Specific (Higher Conversion & Easier Pattern Detection): “Hi, I’m interviewing Field Service Managers with teams of 2-20 field engineers to learn about service documentation processes. My understanding is that at most companies, field engineers fill out forms or checklists on their mobile phones on site (Google Docs, PowerPoint, etc.) and send these via email to the back office. Sometimes service reports get lost, or pictures are missing, leading to issues with customer communication. I’d love to learn about how this works at your company. Would you be open to jumping on a call to share your insights? (It’s not a sales pitch!)”

Prototype Test Invitation (Assumption: higher engagement than an interview request): “Hi, I’ve built a software tool to make it extremely easy for field engineers to capture service notes on the job site. From conversations with several field service companies, I learned that most field engineers hate service documentation. I’m convinced there’s an easier way. I’d love for you to try it out and give your feedback. Would you be open to jumping on a call?”

LinkedIn Results (First Two Weeks of Campaign): 3 interviews with Field Service Managers fitting my ICP, plus 3 expert/influencer interviews (experts identified via ChatGPT).

2. Userinterviews.com

Userinterviews.com is my go-to platform for sourcing interview participants. It has consistently delivered high-quality B2B candidates across a variety of niche ICPs.

Key advantages include:

Powerful Filtering: Allows for precise targeting of participants.

Screener Surveys: These are particularly valuable. They enable you to capture initial customer quotes and insights "for free" even before selecting individuals for full interviews.

I copy and paste the screener survey responses into a file and use Gemini 2.5 Pro to analyze them.

Of course, it’s important not to abuse the free screener survey. I make sure that I always schedule (paid) interviews with fitting applicants.
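The copy-paste step above can be partly scripted. Below is a minimal sketch, assuming the screener responses have been exported to a CSV. The column names (`participant`, `team_size`, `answer`) and the helper names are hypothetical, and Userinterviews.com's actual export format may differ. The script keeps only applicants whose reported team size falls in the 2-20 ICP range and compiles their answers into one plain-text block that can be pasted into Gemini 2.5 Pro alongside an analysis prompt.

```python
import csv
import io

# ICP bounds from this article: teams of 2-20 field engineers.
MIN_TEAM, MAX_TEAM = 2, 20


def fits_icp(row: dict) -> bool:
    """True if the applicant's reported team size falls inside the ICP range."""
    try:
        size = int(row["team_size"])  # hypothetical column name
    except (KeyError, ValueError):
        return False  # missing or non-numeric answers are excluded
    return MIN_TEAM <= size <= MAX_TEAM


def compile_screener(csv_text: str) -> str:
    """Turn a CSV export (hypothetical columns: participant, team_size, answer)
    into one plain-text block with one section per ICP-fitting applicant."""
    reader = csv.DictReader(io.StringIO(csv_text))
    sections = [
        f"## {row['participant']} (team of {row['team_size']})\n{row['answer'].strip()}"
        for row in reader
        if fits_icp(row)
    ]
    return "\n\n".join(sections)


if __name__ == "__main__":
    sample = (
        "participant,team_size,answer\n"
        'P1,8,"Reports arrive by email, photos separately."\n'
        'P2,450,"We use a full FSM suite."\n'
        'P3,3,"I spend hours compiling reports."\n'
    )
    # Print the compiled text; redirect it to a file (e.g. `> screener.txt`)
    # and paste it into the LLM together with your analysis prompt.
    print(compile_screener(sample))
```

Filtering before the LLM sees the text keeps out-of-ICP answers (like the 450-person team above) from diluting the patterns the model reports.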

Discovery Interviews

Conducting the Interview

My interview structure:

Profiling Questions (First 5 Minutes): To segment interviewees (e.g., team size, current documentation stack, multiple branches).

Exploratory Interview (20 Minutes): I avoid rigid scripts, aiming to uncover interviewee stories and pain points, guiding them towards interesting areas. You can find my interview cheat sheet here. A crucial point, #7 on my cheat sheet, is the art of choosing (and iterating on) your opening question. For example, asking "Tell me about the last time something went wrong when a service engineer had to perform a service for a customer" will elicit a very different narrative than "Tell me about how your team handles service documentation."

My interview questions evolve, sometimes on a weekly basis. This evolution is driven by the specific pain point, unmet need, or emerging customer opportunity that I consider most promising at that particular stage of discovery.

Prototype Test (20 Minutes): As I detailed in my earlier article, "How Vibe Coding has Impacted My Discovery Process", I'm introducing prototype tests much earlier in the discovery journey thanks to tools like Replit and Lovable. I use rapidly built, non-functional click dummies to gauge excitement early. For this round, I tested:

A simple mobile service documentation form (desired by three initial interviewees).

A customer service request form (desired by one initial interviewee).

A back-office desktop app for scheduling incoming jobs.

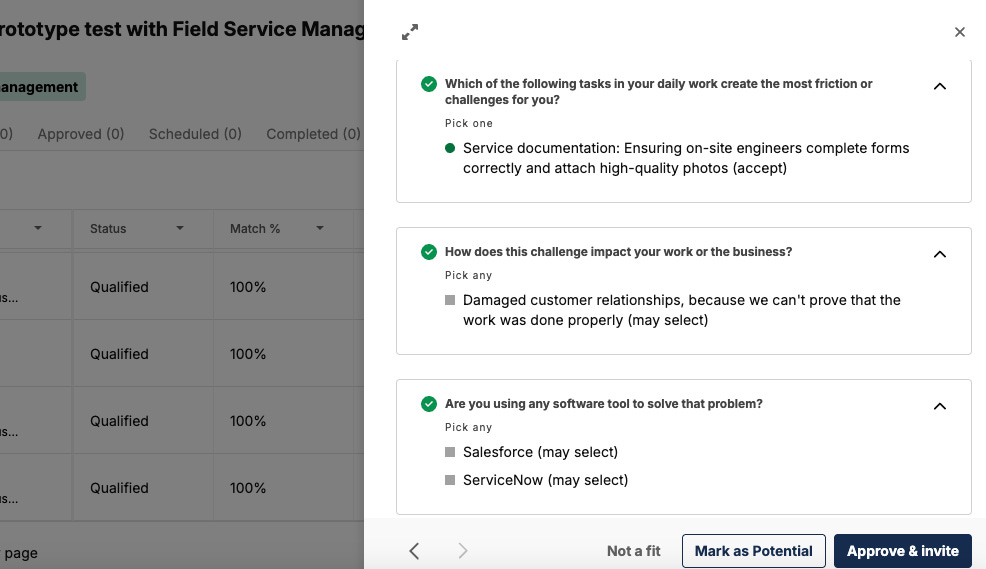

Initial Result (n=2): Significant excitement, suggesting a potentially low bar for a delightful MVP.

In my next article I will outline how I prepare the prototype test, collect feedback, and extract insights.

Collecting Artefacts

During the first interviews, service documentation was repeatedly highlighted as the most painful process, so I decided to zero in on it.

I asked each interviewee to show me the form(s) their field engineers are supposed to fill out. Collecting all these forms will help me understand the best way to translate existing processes to a mobile app.

Extracting Insights & Opportunities from Interviews

Tools

Fathom AI (free tier): records calls and takes decent notes with hyperlinks to the relevant sections of the video. It can distinguish between multiple participants.

Gemini 2.5 Pro to extract insights and opportunities from interview transcripts. Find my prompt under “Extracting insights and opportunities from interview transcripts” on the dedicated Notion page.

Observation: Even with detailed instructions, Gemini still occasionally sneaks solution requests or business opportunities into its list of customer opportunities. As Teresa Torres commented, both humans and LLMs mix up opportunities with solutions, miss important stuff, and jump to faulty conclusions. “I think we can probably teach AI to identify opportunities. But I don't think it’s going to be easy”.

Handwritten Notes & Interview Snapshots

While AI tools offer assistance, the notes I personally take during an interview remain the most reliable source of insight. I can capture nuances of emotion and context that AI simply can't detect.

More importantly, the act of taking notes significantly aids my own information processing. Ultimately, manually assessing interviews is where my deepest learning occurs.

At the end of the day, I'd rather train myself, not the model.

Following each interview, I convert my handwritten notes into "Interview Snapshots" (à la Teresa Torres).

Opportunity Solution Tree with first results

I've created two OSTs (one for ICP interviews, one for experts) to avoid mixing insights. Currently, opportunities are listed horizontally, with plans to add depth as more insights are collected.
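To make the tree structure concrete, here is a minimal sketch of an OST as a plain tree: a desired outcome at the root, opportunities beneath it, and candidate solutions beneath opportunities. The class and method names and the outcome wording are illustrative placeholders, not an export from Vistaly; the opportunities paraphrase pain points from this article. "Listed horizontally" corresponds to every opportunity being a direct child of the outcome, and adding depth means nesting sub-opportunities under them. Keeping one tree per source mirrors the choice to separate ICP and expert insights.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """One node in an Opportunity Solution Tree."""
    kind: str  # "outcome", "opportunity", or "solution"
    text: str
    children: list[Node] = field(default_factory=list)

    def add(self, kind: str, text: str) -> Node:
        """Attach a child node and return it so depth can be added later."""
        child = Node(kind, text)
        self.children.append(child)
        return child

    def depth(self) -> int:
        """Number of levels below this node (0 for a leaf)."""
        return 1 + max((c.depth() for c in self.children), default=-1)

    def render(self, indent: int = 0) -> str:
        """Indented text outline of the tree."""
        lines = ["  " * indent + f"[{self.kind}] {self.text}"]
        lines.extend(child.render(indent + 1) for child in self.children)
        return "\n".join(lines)


# Separate trees keep ICP and expert insights from mixing.
icp_tree = Node("outcome", "Placeholder outcome: less time spent compiling service reports")
# Flat ("horizontal") opportunities: all direct children of the outcome.
missing = icp_tree.add("opportunity", "Service reports go missing or arrive incomplete")
icp_tree.add("opportunity", "Photos are sent separately from reports")
icp_tree.add("opportunity", "Compiling and storing reports takes hours")

expert_tree = Node("outcome", "Placeholder outcome for expert insights")
expert_tree.add("opportunity", "Forms cannot be customized without expert help")

# Adding depth later: a sub-opportunity and a candidate solution beneath it.
pictures = missing.add("opportunity", "Pictures are missing from reports")
pictures.add("solution", "Simple mobile service documentation form")

print(icp_tree.render())
```

A tree (rather than a flat list) makes it easy to see when a solution idea is attached to an opportunity instead of floating free, which is the opportunity/solution mix-up the observation above warns about.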

Screenshot OST ICP (Vistaly)

Research insights

The Good: Strong Problem Signal Around Service Documentation

A consistent theme emerged across all three Field Service Manager interviews: significant struggles with service documentation.

Current Process Inefficiencies: Their field engineers typically use Google Docs or PDFs on mobile devices to capture service information, which is then emailed to the back office. Photos are often sent separately. This forces Field Service Managers to spend hours manually compiling and storing these reports.

Common Pain Points: Missing or incomplete reports, resulting in customer chargebacks.

Tooling Awareness: While familiar with comprehensive solutions like Salesforce, these managers perceive them as overly complex ("too heavy-handed") for their needs. Notably, they were largely unaware of the smaller, dedicated Field Service Management (FSM) tools available.

The three industry experts I consulted echoed these sentiments. They emphasized that existing service documentation forms often lack sufficient customizability (at least without expert intervention), historical search functionality is poor, offline connectivity remains a challenge, and boosting field engineer efficiency is a top priority.

The Bad: LinkedIn Outreach Conversion Challenges

After sending roughly 150 InMails and connection requests, I conducted only three interviews via LinkedIn with Field Service Managers fitting my ICP. The problem could be the channel, or it could be the audience.
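A back-of-the-envelope comparison of the two LinkedIn numbers reported in this article: the week 1 smoke test (3 interviews from 50 outreaches) and this campaign (3 interviews from roughly 150 requests). It assumes the approximate 150 is the full denominator for the three campaign interviews. The function names and the target of 10 interviews are hypothetical.

```python
def conversion_rate(interviews: int, outreaches: int) -> float:
    """Share of outreach messages that turned into an ICP interview."""
    if outreaches <= 0:
        raise ValueError("outreaches must be positive")
    return interviews / outreaches


def outreach_needed(target: int, interviews: int, outreaches: int) -> int:
    """Messages needed for `target` interviews if the observed rate holds.

    Uses integer ceiling division, ceil(target * outreaches / interviews),
    to avoid floating-point rounding surprises."""
    if interviews <= 0:
        raise ValueError("need at least one observed interview")
    return -(-target * outreaches // interviews)


smoke_test = conversion_rate(3, 50)   # week 1 smoke test: 3 of 50
campaign = conversion_rate(3, 150)    # weeks 3 & 4: 3 of roughly 150

print(f"Smoke test: {smoke_test:.0%}")  # prints "Smoke test: 6%"
print(f"Campaign:   {campaign:.0%}")    # prints "Campaign:   2%"
print(outreach_needed(10, 3, 150))      # hypothetical target of 10 interviews -> 500
```

With only three interviews in each sample, the drop from 6% to 2% is a weak signal on its own. Because the messages and audiences may also have differed between the two rounds, the numbers cannot separate a channel problem from an audience problem.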

Second iteration ICP definition (after first interviews)

Upcoming weeks

Prototype tests/user lab. Preparing the test, collecting feedback, and extracting insights.

Ideating solutions

Riskiest assumptions mapping