Week 5: The User Lab. A Guide to Integrating Interviews, Landing Pages, and Prototypes

Identify insights by understanding how expectations shift between different testing components

TL;DR

Session Structure: 45-60 min 1:1 sessions

Three different paths to choose from:

Interview → 1 Landing page test → 1 Prototype test

Interview → multiple landing page tests

Interview → multiple prototype tests

Three parts:

Exploratory Interview: Uncover pain points ("hair on fire" problems)

Moderated Landing Page Test: Validate messaging, value prop, audience fit (vibe-coded)

Moderated Prototype Test: Gauge excitement for solutions (vibe-coded, usually non-functional)

Learnings come not only from the parts in isolation, but also from the transitions between them.

Interview → Landing page/Prototype: Look for ‘unexpected’ reactions, such as:

No stated pain but high prototype excitement = possible latent need

Excitement about a prototype/value prop that doesn’t match the pain/solution they outlined in the interview = possible visionary idea

Strong pain, but low excitement for its solution = opportunity to pivot solution or identify better fit

Landing Page → Prototype:

Does the prototype deliver on the landing page’s promise? Mismatch = retention killer

Benchmarking excitement: Use multiple prototypes/landing pages to compare and gauge "breadth" of participant excitement levels


Introduction

People often ask how I go from zero to a validated B2B solution idea. I’ve noticed that abstract theory is way more powerful when combined with real-world examples.

In this article series, I’m trying to build a bootstrapped B2B business, and I’m walking you through my process step by step.

Missed the start?

My goals and principles: Building a Bootstrapped B2B Product with Gen AI (0 to 1)

Week 1: Hunting for My Underserved Niche ICP with AI & real-world signal (ICP smoke test)

Week 3&4: Zeroing In On My ICP With Interviews & Prototype Tests

This week, I’ll outline how I run my user lab, from prep to insights.

1. Preparing the user lab: Laying the groundwork

If you don’t think through your test in advance, you won’t learn from it.

Hypothesis formulation: What are the riskiest assumptions I’m trying to validate with this test?

It’s fine to validate multiple assumptions with one test, as long as you don’t fall into the trap of trying to validate an entire solution idea with one test. I make sure to document every distinct assumption I am testing, and tie my success metrics back to these individual assumptions.

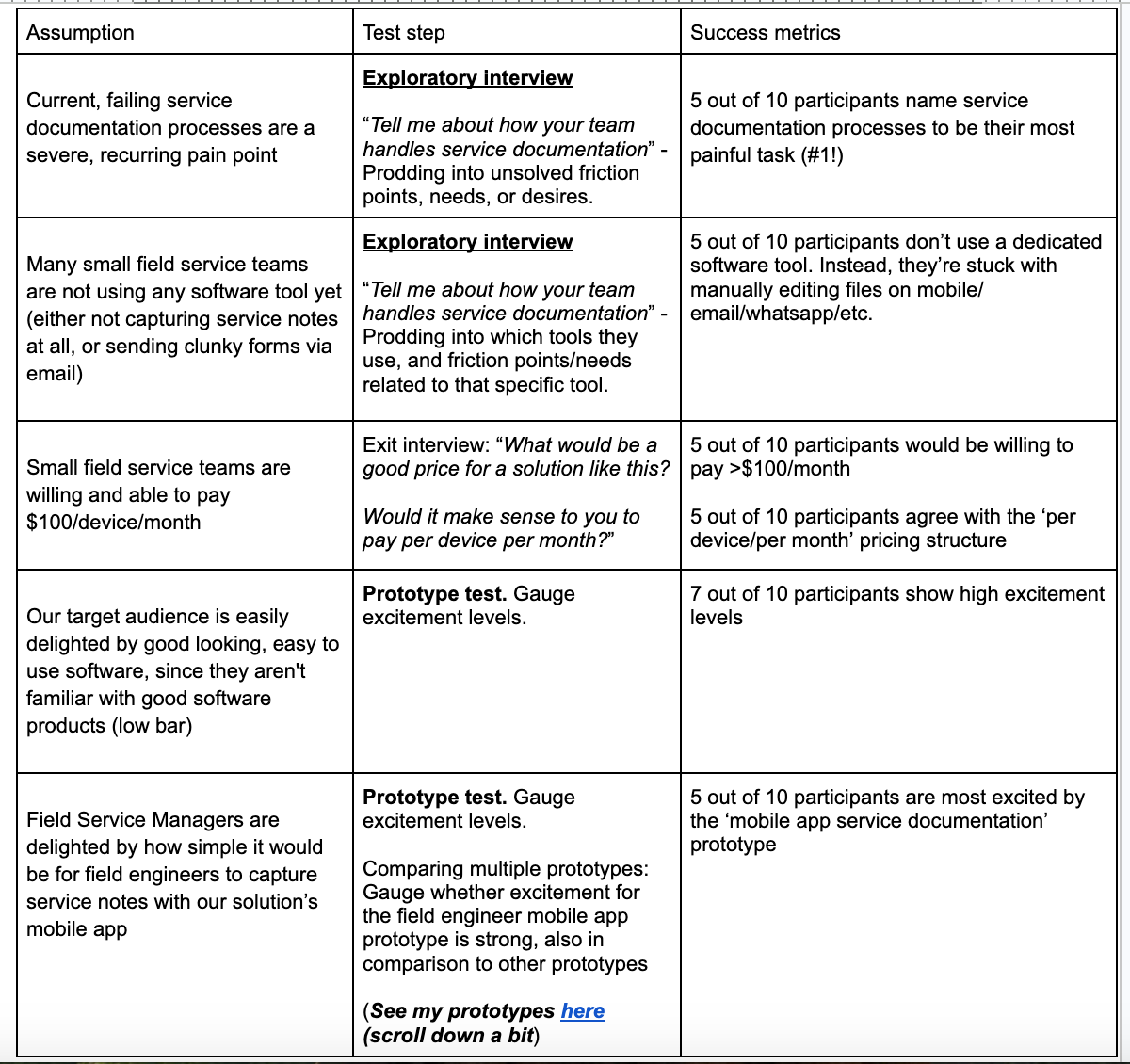

My user lab tested the following riskiest assumptions:

Current, failing service documentation processes are a severe, recurring pain point (hair on fire)

Many small field service teams are not using any software tool yet (either not capturing service notes at all, or sending clunky forms via email)

Small field service teams are willing and able to pay $100/device/month

Our target audience is easily delighted by good-looking, easy-to-use software, since they aren't familiar with good software products (low bar)

Field Service Managers are delighted by how simple it would be for field engineers to capture service notes with our solution’s mobile app

Target Audience / ICP: Who is invited to participate?

Who you invite to your user lab hinges on two factors: your Ideal Customer Profile (ICP) and your specific learning objectives. For instance, if you're testing a new user onboarding journey, inviting non-users who have never encountered your product is best. Conversely, for an upsell module designed for existing customers, those loyal users are your ideal participants.

I’m bullish on inviting only participants who fit your ICP. You want to collect signal, not noise.

I'm targeting Field Service Managers who lead teams of 2-20 field engineers and work for an Original Equipment Manufacturer (OEM). Since I don't have existing users, I'm recruiting non-users who fit this ICP.

Test outline

What will the test look like? Which steps will it entail? Find my test outline below, under Executing the User Lab.

Success metrics: How will I know whether it’s true or false?

While initial exploratory research isn't about definitive validation, I establish clear indicators of interest. These success metrics tie directly back to the underlying assumptions being tested. For these qualitative sessions (typically involving 10-20 participants), I articulate my success metrics in absolute values. For instance, stating '7 out of 10 testers showed high excitement levels' provides more detail than a generalized '70% of testers showed high excitement levels', because it keeps the small sample size visible.
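A minimal Python sketch of tying each assumption to an absolute-count success metric might look like the following. The `SuccessMetric` class and `check` function are hypothetical illustrations, the assumption labels are shortened from the list above, and the thresholds and observed counts are placeholder numbers, not results from this user lab.

```python
from dataclasses import dataclass


@dataclass
class SuccessMetric:
    """One metric per riskiest assumption (hypothetical structure)."""
    assumption: str
    required: int  # minimum number of participants who must show the signal
    out_of: int    # number of participants in the round


def check(metric: SuccessMetric, observed: int) -> str:
    """Report the result in absolute values, e.g. '8 out of 10'."""
    verdict = "met" if observed >= metric.required else "not met"
    return (f"{metric.assumption}: {observed} out of {metric.out_of} "
            f"(needed {metric.required}) -> {verdict}")


# Placeholder thresholds and counts, not real results.
metrics = [
    SuccessMetric("Documentation is a hair-on-fire pain", required=7, out_of=10),
    SuccessMetric("Willing to pay $100/device/month", required=5, out_of=10),
]
observed = {
    "Documentation is a hair-on-fire pain": 8,
    "Willing to pay $100/device/month": 3,
}

for metric in metrics:
    print(check(metric, observed[metric.assumption]))
```

Writing the threshold down before the sessions, per assumption, is the point: it stops a single exciting session from quietly redefining what "validated" means.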

2. Recruiting real-world participants

I typically aim for 10-20 test participants. I’ve been recruiting participants via LinkedIn cold outreach (Sales Navigator) and userinterviews.com. More on this in last week’s article.

3. Preparing the vibe-coded prototype: Bringing ideas to life (lightly)

Check out my previous article “How Vibe Coding has Impacted My Discovery Process” to learn more.

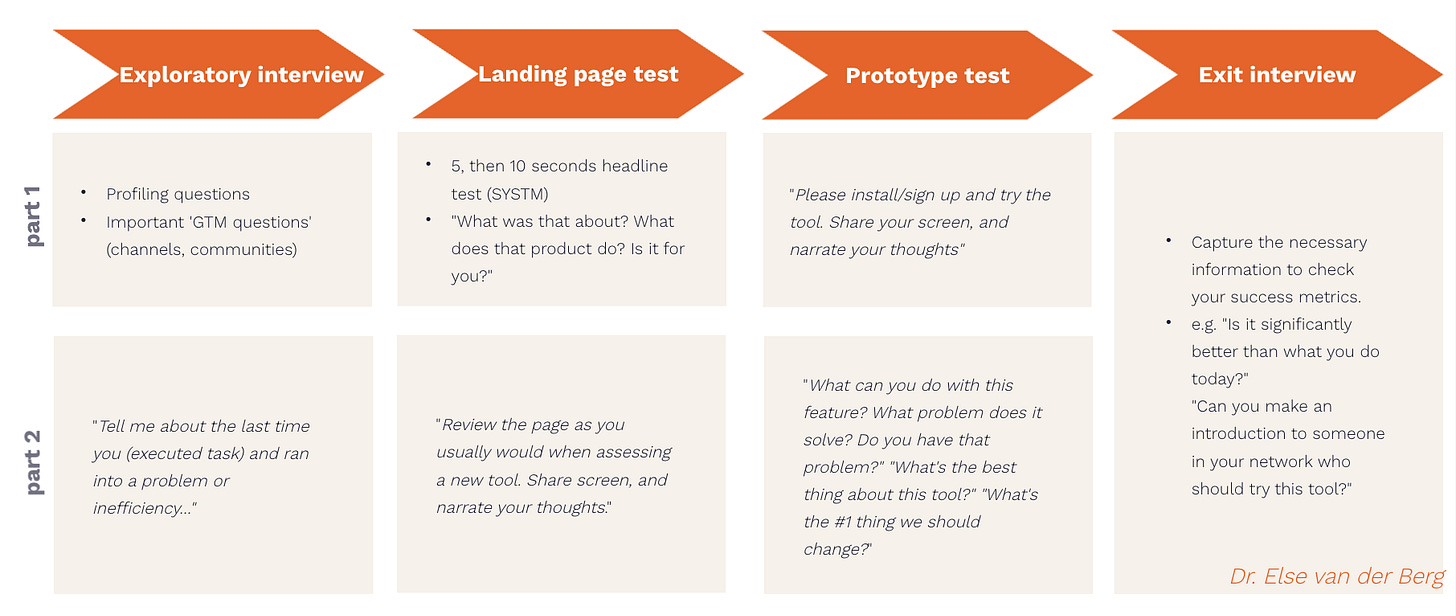

4. Executing the user lab

My user lab follows one of the three paths outlined in the TL;DR above.

Let’s break the flows up into their separate parts:

The exploratory interview

All paths start with an exploratory interview, which I structure as follows:

Short profiling questions: Segmenting for deeper understanding (5 minutes)

I start by gathering the attributes that I believe might turn out to be meaningful when segmenting my audience. This helps me sharpen my ICP over time.

In my case:

Role

Number of field engineers

OEM or independent service provider?

Current tech stack
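If these profiling answers are recorded in a structured way, segmenting later becomes a simple grouping exercise. The sketch below is a hypothetical illustration: the `Participant` fields mirror the attributes above, while the team-size bands, the `high_excitement` flag, and the sample participants are invented placeholders.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Participant:
    # Profiling answers from the first five minutes (field names are hypothetical).
    role: str
    field_engineers: int
    is_oem: bool
    tech_stack: str
    high_excitement: bool  # interviewer's judgment after the session (assumed flag)


def team_band(n: int) -> str:
    """Bucket team size; the 2-20 band matches the current ICP."""
    if n < 2:
        return "<2"
    if n <= 20:
        return "2-20"
    return ">20"


def excitement_by_segment(participants):
    """Return {(org type, team band): (excited, total)}."""
    segments = defaultdict(lambda: [0, 0])
    for p in participants:
        key = ("OEM" if p.is_oem else "Independent", team_band(p.field_engineers))
        segments[key][1] += 1
        if p.high_excitement:
            segments[key][0] += 1
    return {key: (excited, total) for key, (excited, total) in segments.items()}


# Invented placeholder participants, not real interview data.
participants = [
    Participant("Field Service Manager", 8, True, "paper forms", True),
    Participant("Field Service Manager", 15, True, "email forms", True),
    Participant("Service Director", 40, False, "ERP module", False),
    Participant("Field Service Manager", 5, False, "none", False),
]

for (org, band), (excited, total) in sorted(excitement_by_segment(participants).items()):
    print(f"{org}, {band} engineers: {excited} out of {total} highly excited")
```

With 10-20 participants, each segment holds only a handful of people, so a pattern like this is a prompt to recruit more from a segment, not a conclusion in itself.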

Short questions to inform my GTM strategy (1 minute)

Examples: "Which forums or communities do you frequent for professional insights?" or "How do you stay on top of news about field service management?"

This will help me identify new channels for outreach/marketing.

The exploratory interview: Pressing for pain points (15 minutes)

This segment is dedicated to understanding their current workflows and identifying unsolved pain points. I asked my participants to tell me about how their team handles service documentation, detailing any unsolved friction points or needs they face.

Check out my previous article “Zeroing In On My ICP With Interviews & Prototype Tests” for theory on interviews.

After the exploratory interview, I follow one of three paths:

Path 1: Interview → 1 landing page test → 1 prototype test → Exit interview.

New users rarely encounter your product without prior exposure. Their expectations are often set by your landing page, marketing materials, or sales interactions. It's crucial to determine if these initial perceptions match the actual product experience.

Testing the landing page / marketing message

Right after the interview, I show participants the landing page to mimic a real-world entry point.

How I run this test

This involves two distinct phases:

Headline clarity check (5 seconds) (borrowed from Systm): I share my screen, display the landing page, and after a brief five-second glance, switch to a blank tab. I then ask, 'What did you just see? What was the product about?' This quickly reveals if the headline effectively communicates the core offering. Expect a lot of “I wasn’t paying attention…”

Self-directed exploration (approx. 15 min): After the headline test, participants receive the URL and share their screen. I instruct them to review the landing page as they typically would when considering new software, narrating their thoughts. This uncovers natural user behavior and provides opportunities for follow-up questions.

What we validate:

A landing page test helps confirm:

The headline's effectiveness in grabbing attention and communicating clearly.

The resonance of the value proposition and whether it speaks to the target audience.

The alignment between user expectations (from the landing page) and the prototype experience. A significant gap here is a common cause of activation and retention issues.

Interacting with the Prototype

To be clear, in this section I’m talking about moderated prototype tests.

Rather than assigning specific tasks, I usually let participants explore the prototype as they normally would. This often reveals more authentic usage patterns. As they screenshare, I ask them to narrate their thoughts aloud. I pay close attention to where they stumble, what excites them, and what leaves them cold. Key questions I ask during this phase include:

Which features do you like, and which don’t you like?

For the features they like: what problem or need does each one solve for them?

When was the last time they experienced that problem, and how often do they experience it? How big of a problem is it?

Path 2: Interview → Landing page test 1 → Landing page test 2 → (landing page test 3) → exit interview.

Goal: compare-and-contrast value propositions.

I extend landing page testing to include multiple pages (two or even three) to gain deeper insights:

Measure excitement range: Some participants seem quite excited about solution 1... until you show them solution 2!

Drive compare-and-contrast decisions: Prompting participants to choose a 'winner' and explain their reasoning provides rich qualitative data.

This multi-page strategy is effective for:

Prioritizing pain points: If I’m unsure whether the pain point I’m solving is the most important, I test landing pages addressing the top three potential issues.

Identifying the winning solution to a known pain point: If I’m confident about my #1 pain point, I test different solution approaches for that single problem.

Rapidly generating these diverse landing pages is relatively easy with tools like Lovable. The critical effort, however, lies in developing strong solution concepts and their messaging. Luckily, ChatGPT, Claude, and Gemini are excellent creative sparring partners.

The exit interview (described below) checks the success metrics and includes a “Pick a winner” question.

Path 3: Interview → Prototype test 1 → Prototype test 2 → (Prototype test 3) → Exit interview

Goal: gauge excitement levels for various prototypes. Similar to path 2, except with multiple prototypes.

The exit interview (described below) checks the success metrics and includes a “Pick a winner” question.

The transitions between interview, landing page, and prototype test

It's fascinating how some interviewees struggle to articulate their pain points until they see a potential solution, either outlined on a landing page or in prototype form.

Sometimes, they'll list a pain point in the interview but show little excitement for the solution I've built for it, only to light up about something completely unexpected.

I actively look for these unexpected patterns and deviations from my initial assumptions. This tells me something about potential latent needs and helps me validate visionary ideas.

The exit interview: Gathering key metrics and uncovering “Why?”

This final segment is designed to capture answers to my success metrics and gather additional insights. For me, these typically include:

"Would you try this product if you found it in the wild?"

"Is this significantly better than what you currently use?"

"How much would you be willing to pay for a solution like this?" It's important to remember that these are self-reported statements and serve as assumptions to be further validated, not definitive proof of willingness to pay.

(“Pick a winner” - in the case of a multi landing page/ multi prototype test)

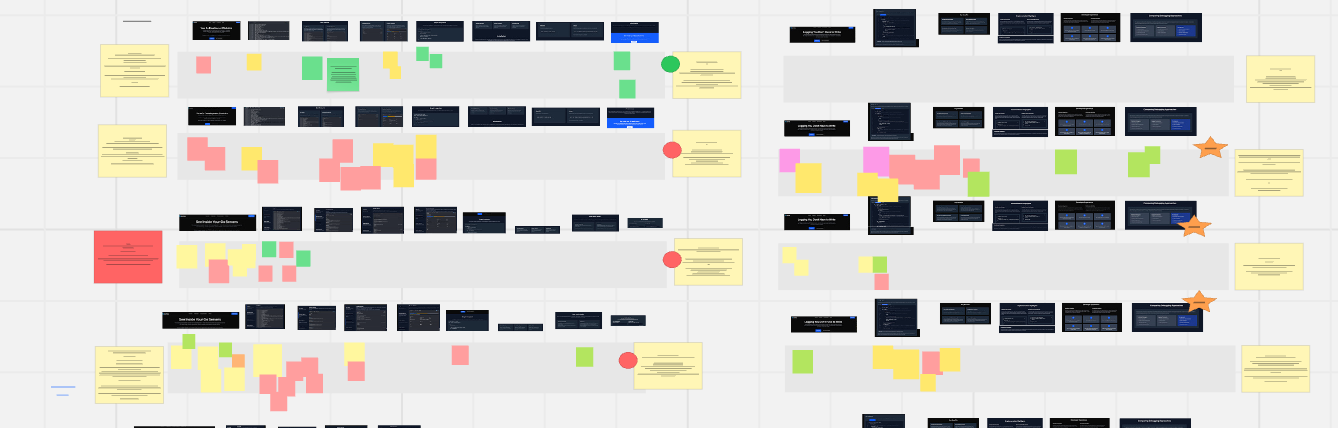
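Tallying the “Pick a winner” answers is simple; the reasoning behind each vote is where the qualitative value sits, so this hypothetical Python sketch keeps the stated reasons next to the counts. The variant names, participant IDs, and reasons are placeholders, not real answers.

```python
from collections import Counter, defaultdict

# Placeholder exit-interview answers: (participant, winning variant, stated reason).
votes = [
    ("P1", "B", "reason given by P1"),
    ("P2", "A", "reason given by P2"),
    ("P3", "B", "reason given by P3"),
    ("P4", "B", "reason given by P4"),
    ("P5", "C", "reason given by P5"),
]


def tally_winners(votes):
    """Count wins per variant and group the stated reasons by variant."""
    counts = Counter(winner for _, winner, _ in votes)
    reasons = defaultdict(list)
    for _, winner, reason in votes:
        reasons[winner].append(reason)
    return counts, reasons


counts, reasons = tally_winners(votes)
for variant, wins in counts.most_common():
    print(f"Variant {variant}: {wins} out of {len(votes)} picked it")
    for reason in reasons[variant]:
        print(f"  - {reason}")
```

Reading the reasons grouped by variant makes it easier to spot when a "winner" won for the same reason every time, or for reasons that have little to do with the pain point being tested.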

Taking notes: Using Miro for real-time analysis

During each test, I meticulously take notes on a Miro board.

Negative feedback goes on red stickies.

Positive feedback on green stickies.

Neutral or "meh" observations on yellow.

Actions the user took (without explicit sentiment) on grey.

I list the profiling questions and their answers on the left side of the board and the exit interview responses on the right. This visual system allows me to quickly zoom out and gauge the overall sentiment of the session by the distribution of colors. I also add notes about whether the participant truly fit the ICP or if there were other important observations.
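If the sticky colors for a session are transcribed or exported into a list (that step isn't shown and depends on your setup), the "zoom out and look at the colors" check can be approximated by counting. This is a hypothetical sketch: the color-to-meaning mapping follows the scheme above, the session list is a placeholder, and grey action notes are excluded from the positive share because they carry no sentiment.

```python
from collections import Counter

# Color scheme from the note-taking setup above.
COLOR_MEANING = {
    "red": "negative",
    "green": "positive",
    "yellow": "neutral",
    "grey": "action",
}


def sentiment_distribution(sticky_colors):
    """Count stickies per meaning and compute the positive share of sentiment notes."""
    counts = Counter(COLOR_MEANING[color] for color in sticky_colors)
    # Grey notes record actions without sentiment, so they are left out of the share.
    sentiment_total = counts["positive"] + counts["negative"] + counts["neutral"]
    share = counts["positive"] / sentiment_total if sentiment_total else 0.0
    return {
        "positive": counts["positive"],
        "negative": counts["negative"],
        "neutral": counts["neutral"],
        "action": counts["action"],
        "positive_share": share,
    }


# Placeholder session board, not real data.
session = ["green", "green", "red", "yellow", "grey", "green", "grey", "red"]
print(sentiment_distribution(session))
```

A number like this is only a quick way to flag sessions worth revisiting; it can't tell a genuine "wow" from a polite one.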

5. Extracting insights: Beyond the empty "wow"

The real work begins after the interviews are complete. This is when I synthesize the qualitative data into actionable insights.

Checking success metrics: I systematically review the exit interviews to see if my initial success metrics were met. This informs my next steps.

Identifying points of friction and clarity: I go through all the tests to pinpoint where the product or messaging was unclear, confusing, or simply didn't resonate.

Painfully critical self-assessment of enthusiasm: Was the "WOW" a genuine expression of excitement, or merely polite enthusiasm because I was watching over their shoulder? This requires a strong dose of gut feeling and brutal honesty. Presenting multiple prototypes to a single participant can help gauge the breadth of their excitement levels more accurately. I always believe the "No, I don't like it" and take the "Yes, I like it" with a significant grain of salt.

Looking for deeper signals of value:

Excitement and specificity: Phrases like "This would save me 2 hours/week" are far more valuable than a generic "Nice UI." The former indicates a tangible problem being solved.

Signs of real-world pull: I try to tease out real-world behavior wherever possible, because people tend to overpromise when answering hypotheticals. For example, instead of asking “Would you recommend this to a friend?”, I ask the excited interviewees whether they can make a warm introduction to someone in their network who they believe needs this tool.

This iterative process of preparing, testing, and extracting insights is how I ensure that every product I build is truly solving a problem for real people, rather than just adding another feature to the digital shelf.